It seems that Tryton could benefit from the recent advancements in Large Language Models like GPT-3/4 or LlaMa.

My proposal is not to bind Tryton to one specific implementation but provide the necessary abstractions to connect to these or other language models or other ML tools.

One idea on how to take advantage of these tools would be the following:

Add a button in the top menu bar of Tryton that allows users to add content. The user may write some text, drag & drop a file, or push a button to record some audio.

In case of audio, a speech recognition tool would be used to generate the text.

Files would be treated as URLs or converted into text (we should see what is the best option).

That content would be sent to a LLM asking it to return a JSON with the necessary actions to be executed by trytond and/or sao.

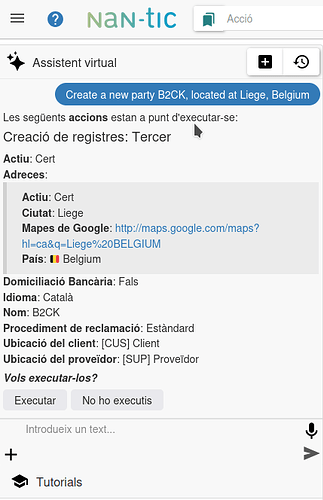

The inbox shown in sao, should be able to “explain” the user what actions will be executed and if the user agrees, then they would be executed.

For example, the following prompt in ChatGPT-4:

Given the prompt return a JSON with a list of actions with the following structure:

[{

'action': 'create|search|update',

'model': 'party.party|account.invoice|sale.sale|purchase.purchase',

'values': { # Shown only in create|update actions

{key: value},

}

]

Available fields for party.party are name, code.

Available fields for account.invoice are number, party, invoice_date, lines.

Available fields for sale.sale are reference, party, sale_date, lines.

Available fields for purchase.purchase are reference, party, purchsae_date, lines.

Here's the prompt:

Hi!

the purchase manager of Zara made an order (their reference is 2314) of 25 light bulbs model 42321 that should be delivered ASAP. Remember that they have never purchased yet.

returns:

Here's the JSON with a list of actions to create a new party and a new purchase:

[{

'action': 'create',

'model': 'party.party',

'values': {

'name': 'Zara',

'code': 'ZARA'

}

},

{

'action': 'create',

'model': 'purchase.purchase',

'values': {

'reference': 'Compra_Zara_2314',

'party': 'Zara',

'purchase_date': '2023-03-21',

'lines': [

{'product': 'light bulbs model 42321', 'quantity': 25}

]

}

}]

So given that the LLM does most of the work by itself I think Tryton should provide an integrated interface, the JSON that must be passed to the LLM so it can learn the correct output format and options, as well as a mechanism to process that JSON so new modules can “plug-in” new possibilities.

My opinion is that some actions could be executed by the server (one may be interested in supplying that kind of information using webservices) but other actions should be executed by sao itself. For example, consider the case that the text provided by the user contains the necessary information to create an invoice except for the name of the party, or they have to choose between a couple of them before the rest of the invoice can be saved.

What’s your opinion on the kind of integration Tryton should provide if any?