I did some recherche and testing on this topic. I also discovered a new project „ldtp“.

-

dogtail

- written in Python

- could be used for the Tryton desktop client

- uses the accessibility interface (atspi, Assistive Technology Service Provider Interface), thus it should work for Wayland, too

- allows scripting the GTK client. It works on a KDE desktop as well. Installation via pip (requires pyatspi distro-package python3-pyatspi, which is not listed as dependency)

- Not sure whether it is X11 only since it requires some xinit interface.

- One can „click” or „focus’‘ elements based on its „name“, „description“, „role“ (eg. ‚page tab‘) and „id” — although the id is never set for the Tryton client.

- There are two APIs: a „focus“-oriented and a „node“ oriented. The former seems more elegant, but misses some actions like double-click.

- development seems stalled, anyhow there seem to be quite some users

- An issue about publishing v0.9.10 on PyPI is still open while this version is on PyPI since four years already.

- Version 0.9.11 was tagged three years ago, but this version is still not in PyPI. (resp.ticket exists)

- Some sibling projects use dogtail for behavior driven testing, e.g. dogtail / zenity · GitLab

-

ldtp2

- written in Python

- could be used for the Tryton desktop client

- Claims to be platform independent: Linux, Windows, MacOS

- I did not test it, since it seems to use a client-server architecture and thus be more complicated to setup than dogtail.

- API looks much like he one for dogtail.

- Project status is not clear, see Status of project and repository unclear · Issue #59 · ldtp/ldtp2 · GitHub for details.

- Releases seem to not yet support Python 3 — which is a deal-breaker

-

xdotool

- written in C

- could be used for the Tryton desktop client and the Web client

- X11 only. will most probably break on wayland.

- emulates key-presses and mouse-moves, window manipulation

- For executing an action (e.g. click on a button or into a text-box) one needs to know the screen position of the element in pixels. This is very low-level. We could leverage this by adding some abstraction layer, which knows about the position and sizes of elements. Anyhow, this seems cumbersome.

- I did not test it (due to the last point)

-

testcafe

- written in Javascript

- could be used for the web client

- Nodes are selected using CSS selectors and selcetor functions.

- actions are performed on the selected nodes.

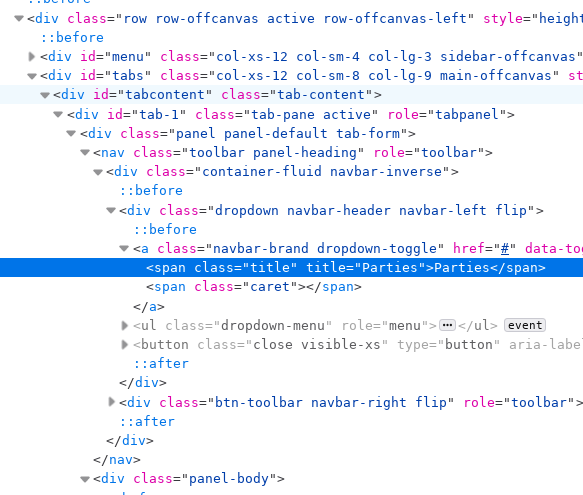

- Due to the structure of the HTML in the Tryton Web UI (see image), we would need to implement selector functions to select e.g. „the ‚New‘ button in the toolbar of the tab panel ‚Parties‘“

- I did not test it since I dislike Javascript, though this might be the way to create videos for the Web UI

text-to-speech options

- say.js mentioned in the original post

- written in Javascript, to be used with testcafe — at least this is the idea

- For Linux: uses the „Festival“ speech engine, does not support exporting the files (thus no caching)

Festival seems to support English only — which is deal-breaker IMHO - For Windows: uses the Windows SAPI.SpVoice API

- For Mac: Uses the Mac tool

say

For Python, there is no package like says.js AFAIK. Anyhow, this can be implemented using a TTS package and some play-back module, eventually caching the synthesized speech. (And indeed I already implemented this)

- TTS— offline

Uses language models by Coqui. Examples for English sound very good, same for the few tests I made for German. HUGE, since all offline. - pyttx/pyttx3 — offline

Uses the operating-system’s mechanisms to generate speech (Windows: sapi5, Mac: nsss - NSSpeechSynthesizer, other: espeak — espeak is lousy AFAIK). Allows setting rate, voice, volume. - gTTS — online

Used the Google text-to-speech API. Seems to have only one voice per language. (German voice is a bit slow. Both German and English sound a bit tinny.) I also tested other German voices Supported voices and languages | Cloud Text-to-Speech API | Google Cloud, all somewhat tinny. - google-tts — online

Another package for Google TTS. Seems to allow setting rate, voice, output-encoding, etc. - ttw-wrapper — online or offline depending on used engine/service

Wrapper for several TTS engines: AWS Polly, Google, Microsoft, IBM Watson, PicoTTS, SAPI - tts-watson — online

API for accessing the IBM Watson TTS. Requires an API key, so I did not try it. (Registration and service seems to be free of charge) - nemo-tts — NVIDIA Neural Modules, package seems a bit outdated

Unsorted findings:

- RHvoice project — seems to focus on eastern European languages

- sanskrit-tts — python module

- baidu-tts — python module

- liepa-ttts — python module, Lithuanian language synthesizer from LIEPA project

- PyPI list a lot more modules I did not investigate

Links:

- IBM Watson service: IBM Cloud Docs

- AWS Polly: Amazon Polly

- Google: Text-to-Speech documentation | Cloud Text-to-Speech API | Google Cloud

- Microsoft Cortana: Text to speech API reference (REST) - Speech service - Azure AI services | Microsoft Learn (old link)

And for play-back:

- playsound — Python

could be used to play the exported sound files.